Cloudera AI Inference Service GA On-Premises

We’re excited to announce the general availability (GA) of the Cloudera AI Inference service for on-premises deployments. This milestone marks a significant expansion of our private AI vision, allowing enterprises to deploy, scale, and govern the latest generative AI (GenAI) models directly within their own data centers.

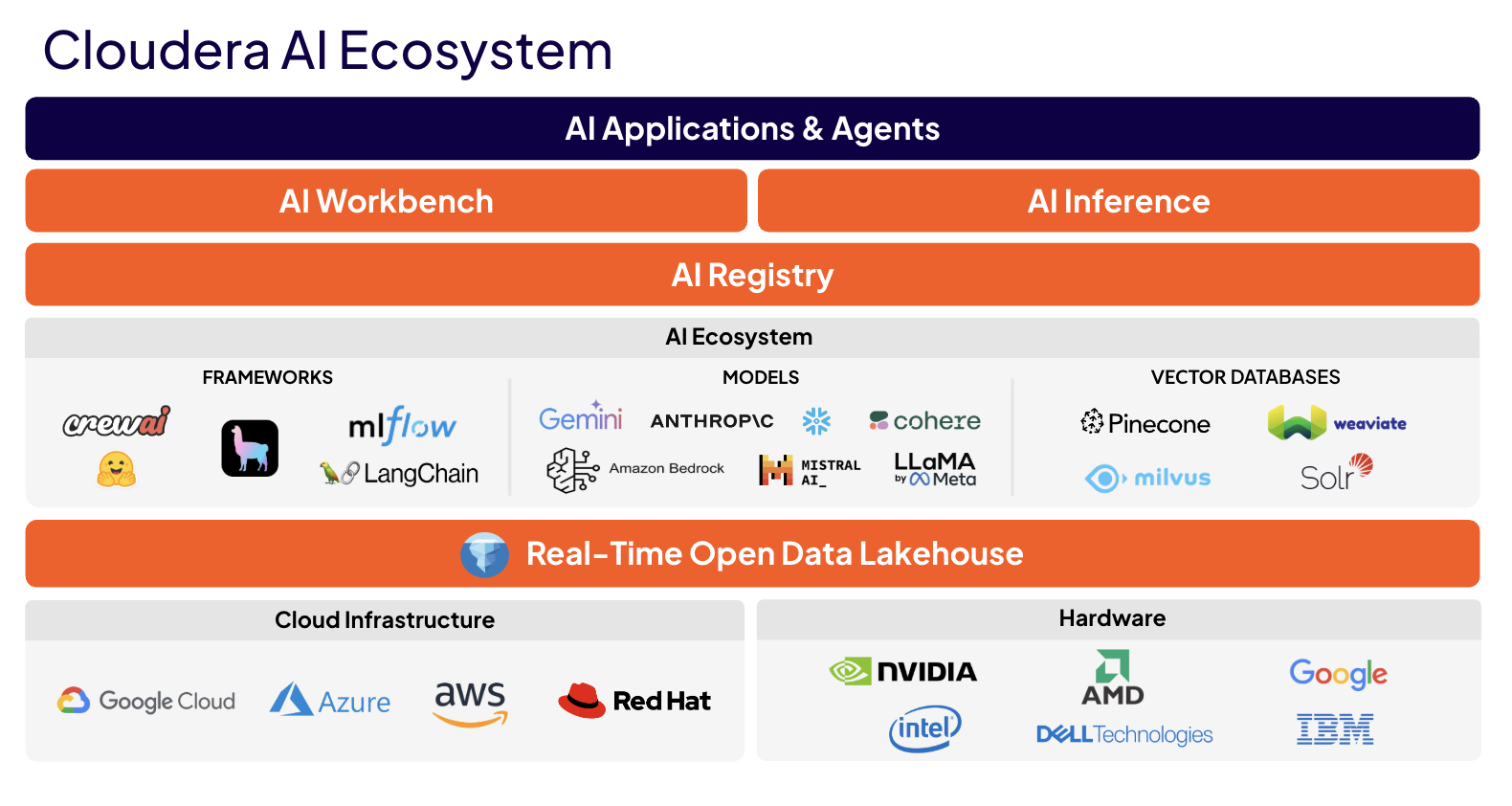

Powered by NVIDIA NIM, the Cloudera AI Inference service provides the control and performance that enterprises need to accelerate innovation without compromising data security.

Empowering True Private AI

Cloudera AI Inference for on-premises is engineered for organizations that demand absolute control over their intellectual property and data sovereignty.

- Complete Data Privacy: Ensure that sensitive data, prompts, and model responses never leave your secure corporate network, meeting strict regulatory requirements.

- Air-Gapped Readiness: Full support for highly secure, air-gapped environments, providing the tools and documentation necessary to import and manage models without external connectivity.

- Storage Flexibility: Full support for any S3-compatible object storage.

Predictable TCO for Production AI

As enterprises move from experimentation to steady-state production, the on-premises service offers a significantly lower total cost of ownership (TCO) compared to token-based cloud APIs*.

The AI Inference on-premises service lowers long-term TCO by giving you ownership of the GPU infrastructure. The upfront hardware investment is spread across every request served, so it pays off quickly when inference runs around the clock.
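To make the break-even reasoning concrete, here is a minimal sketch of the arithmetic. The function name, its parameters, and every number in the example are hypothetical placeholders chosen for illustration, not Cloudera pricing or benchmark data; substitute figures from your own environment.

```python
def breakeven_months(gpu_capex, monthly_opex, tokens_per_month, api_price_per_million_tokens):
    """Return the number of months until owned GPU infrastructure has cost less
    than a per-token API, or None if the API stays cheaper at this volume.

    All inputs are assumptions supplied by the caller:
      gpu_capex                     upfront hardware spend
      monthly_opex                  power, cooling, support, staffing per month
      tokens_per_month              steady-state inference volume
      api_price_per_million_tokens  blended token price of the cloud API
    """
    monthly_api_cost = tokens_per_month / 1_000_000 * api_price_per_million_tokens
    monthly_saving = monthly_api_cost - monthly_opex
    if monthly_saving <= 0:
        # Volume is too low for ownership to pay back.
        return None
    return gpu_capex / monthly_saving


# Illustrative, made-up inputs only.
high_volume = breakeven_months(240_000, 5_000, 10_000_000_000, 2.0)
low_volume = breakeven_months(240_000, 5_000, 1_000_000_000, 2.0)
print(high_volume, low_volume)
```

With these placeholder inputs, the high-volume case breaks even and the low-volume case never does, which is the point of the "24/7" qualifier: the advantage depends on sustained utilization.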

Feature Highlights for Production-Grade AI

- Optimized Performance: Prebuilt and optimized engines, including TensorRT-LLM and vLLM, ensure low-latency, high-throughput inferencing.

- Seamless Integration: Integrate with existing MLOps workflows and CI/CD pipelines using OpenAI-compatible APIs for large language models (LLMs) and Open Inference Protocol APIs for traditional models.

- Comprehensive Monitoring: Track performance metrics such as latency, throughput, and resource utilization in real time to ensure operational continuity.
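As a rough illustration of the two API styles mentioned above, the sketch below builds (but does not send) one OpenAI-compatible chat completion request and one Open Inference Protocol request using only the Python standard library. The base URLs, model names, token, and input tensor name are hypothetical placeholders; the actual endpoint URLs and authentication details come from your own deployment.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with values from your own deployment.
LLM_BASE_URL = "https://inference.example.internal/llm-endpoint/v1"
OIP_BASE_URL = "https://inference.example.internal/classic-endpoint"
API_TOKEN = "REPLACE_ME"


def _post(url, payload):
    """Build a JSON POST request with a bearer token (nothing is sent here)."""
    return urllib.request.Request(
        url=url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_TOKEN}",
        },
        method="POST",
    )


def build_chat_request(prompt, model="example-llm"):
    """OpenAI-compatible chat completion request for an LLM endpoint."""
    payload = {
        "model": model,  # hypothetical model ID
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return _post(f"{LLM_BASE_URL}/chat/completions", payload)


def build_oip_request(rows, model="example-classifier"):
    """Open Inference Protocol (V2) REST inference request for a traditional model.

    `rows` is a list of equal-length lists of floats; it is sent as one FP32
    tensor with the values flattened in row-major order.
    """
    payload = {
        "inputs": [
            {
                "name": "input-0",  # hypothetical tensor name
                "shape": [len(rows), len(rows[0])],
                "datatype": "FP32",
                "data": [value for row in rows for value in row],
            }
        ]
    }
    return _post(f"{OIP_BASE_URL}/v2/models/{model}/infer", payload)


chat_req = build_chat_request("Summarize our data retention policy.")
oip_req = build_oip_request([[5.1, 3.5, 1.4, 0.2]])
print(chat_req.full_url)
print(oip_req.full_url)
```

From inside your network, either request could then be sent with `urllib.request.urlopen(...)`. Because the LLM interface is OpenAI-compatible, existing OpenAI client code can typically be reused by pointing its base URL at the on-premises endpoint.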

Get Started Today

The Cloudera AI Inference service enables enterprises to achieve high-performance, private AI with a significantly lower TCO than cloud-based APIs. By keeping workloads on-premises, customers ensure absolute data sovereignty and predictable costs for production-scale GenAI.

To get started, visit the Model Hub to select from a catalog of optimized models ready for deployment within your secure environment.

As always, check out the full documentation for Cloudera AI.

If you would like a deeper dive, hands-on experience, or a demo, or if you are interested in speaking with me further about Cloudera AI Inference, please reach out to schedule a discussion.